China on Tuesday revealed proposed assessment measures for generative artificial intelligence (AI) tools, telling companies they must submit their products for assessment before launching them to the public.

The Cyberspace Administration of China (CAC) proposed the measures to prevent discriminatory content, false information and content that could harm personal privacy or intellectual property, the South China Morning Post reported.

According to the CAC, the measures would also ensure that the products do not generate content suggesting regime subversion or disrupting the economic or social order.

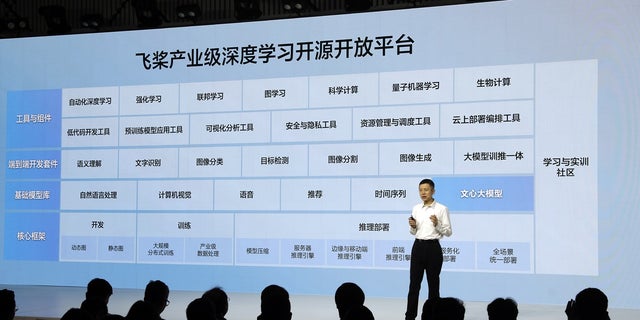

A number of Chinese companies, including Baidu, SenseTime and Alibaba, have recently shown off new AI models to power applications ranging from chatbots to image generators, prompting concern among officials over the impending boom in their use.

People visit the Alibaba booth during the 2022 World Artificial Intelligence Conference at the Shanghai World Expo Center on September 3, 2022, in Shanghai, China. (VCG/VCG via Getty Images)

The CAC also stressed that the products must align with the country's core socialist values, Reuters reported. Providers that fail to comply with the rules will face fines, suspension of their services or even criminal investigations.

If their platforms generate inappropriate content, the companies must update the technology within three months to prevent similar content from being generated again, the CAC said. The public can comment on the proposals until May 10, and the measures are expected to come into effect sometime this year, according to the draft rules.

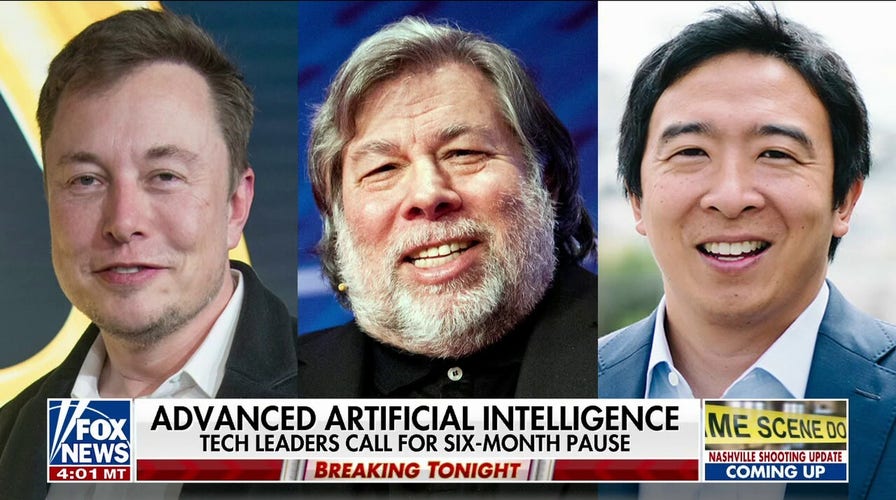

Concerns over AI's capabilities have increasingly gripped public discourse following a letter from industry experts and leaders urging a six-month pause in AI development while officials and tech companies grapple with the wider implications of programs such as ChatGPT.

Cao Shumin, vice minister of the Cyberspace Administration of China, attends a State Council Information Office (SCIO) press conference on the 6th Digital China Summit on April 3, 2023, in Beijing, China. (VCG/VCG via Getty Images)

ChatGPT remains unavailable in China, which has set off a land grab in the country's AI sector, with several companies racing to launch similar products.

Baidu struck first with its Ernie Bot last month, followed soon after by Alibaba’s Tongyi Qianwen and SenseTime’s SenseNova.

Beijing remains wary of the risks that generative AI can introduce, with state-run media warning of a “market bubble” and “excessive hype” about the technology and concerns that it could corrupt users’ “moral judgment,” according to the Post.

Wang Haifeng, chief technology officer of Baidu Inc., speaks during a launch event for the company’s Ernie Bot in Beijing, China, on Thursday, March 16, 2023. (Qilai Shen/Bloomberg via Getty Images)

ChatGPT has already stirred controversy over incidents that raised concerns about the technology, including allegations that it gathered Canadian citizens' private information without consent and that it fabricated a sexual harassment accusation against law professor Jonathan Turley.

A study from Technische Hochschule Ingolstadt in Germany found that ChatGPT could, in fact, have some influence on a person's moral judgments. The researchers provided participants with statements arguing for or against sacrificing one person's life to save five others, a dilemma known as the trolley problem, and mixed in arguments from ChatGPT.

The study found that participants were more likely to judge sacrificing one life to save five as acceptable or unacceptable depending on whether the statement they read argued for or against the sacrifice, even when the statement was attributed to ChatGPT.

“These findings suggest that participants may have been influenced by the statements they read, even when they were attributed to a chatbot,” a release said. “This indicates that participants may have underestimated the influence of ChatGPT’s statements on their own moral judgments.”

The study noted that ChatGPT sometimes provides information that is false, makes up answers and offers questionable advice.

Fox News Digital’s Julia Musto and Reuters contributed to this report.